Redefining Data Stewardship: How Computational Governance Aligns with DataOps and Modern Architecture

In the fast-paced world of modern data systems—data lakes, real-time AI, and DataOps—traditional Data Governance is undergoing a radical transformation. The rise of computational data governance marks a shift from manual, rigid policies to automated, real-time, and agile frameworks that align seamlessly with DataOps, CI/CD, and the data product lifecycle. By integrating test-driven development (TDD), shift-left testing, and data contracts, computational governance empowers organizations to manage data products effectively while fostering robust data stewardship. This article explores how these concepts converge to redefine governance, debunking the myth that it’s “100% opposite” to agile practices like DataOps and CI/CD.

What is Computational Data Governance?

Computational data governance is the automated, code-driven enforcement of data policies and quality standards that operates at the speed and scale of modern data systems. Unlike traditional governance, which relies on manual audits and static rules, computational governance embeds intelligent controls directly into data pipelines, operating in real-time to ensure compliance and quality across complex data ecosystems like data lakes. It’s a proactive, engineering-first approach that treats governance as code, enabling organizations to maintain data integrity while moving at DataOps velocity.

Key features include:

- Policy-as-Code: Governance rules (e.g., access controls, data quality standards) are codified and version-controlled using tools like Apache Ranger or Databricks Unity Catalog.

- Real-Time Observability: Continuous monitoring of data quality, lineage, and compliance using observability platforms like Monte Carlo or WhyLabs, providing the transparency essential for DataOps.

- Metadata-Driven Intelligence: Rich metadata capture enables automated policy enforcement, lineage tracking, and impact analysis across the data product lifecycle.

- AI-Driven Insights: Machine learning predicts risks (e.g., data drift, bias) and suggests policy refinements, ensuring governance evolves with data.

This computational approach is critical in environments where data lakes ingest diverse, high-velocity data for AI, bypassing traditional Dev/Test/QA due to cost and complexity. The observability principles of DataOps—continuous monitoring, transparent metrics, and rapid feedback loops—become the foundation for governance that doesn’t impede innovation but enables it. Rich metadata capture ensures that every data transformation, quality check, and policy enforcement is traceable, creating the engineering rigor necessary for enterprise-scale data operations.

The Data Product Lifecycle and Stewardship

Data products—curated datasets, analytics dashboards, or AI models—are the outputs of DataOps, designed to deliver business value. The data product lifecycle (ingestion, transformation, validation, delivery, and iteration) requires robust governance to ensure reliability, compliance, and trust. Data stewardship, the practice of managing data as a strategic asset, underpins this lifecycle by defining responsibilities, ensuring quality, and aligning data with business goals. However, without engineering rigor and clear metadata standards, even the best stewardship practices fail to scale.

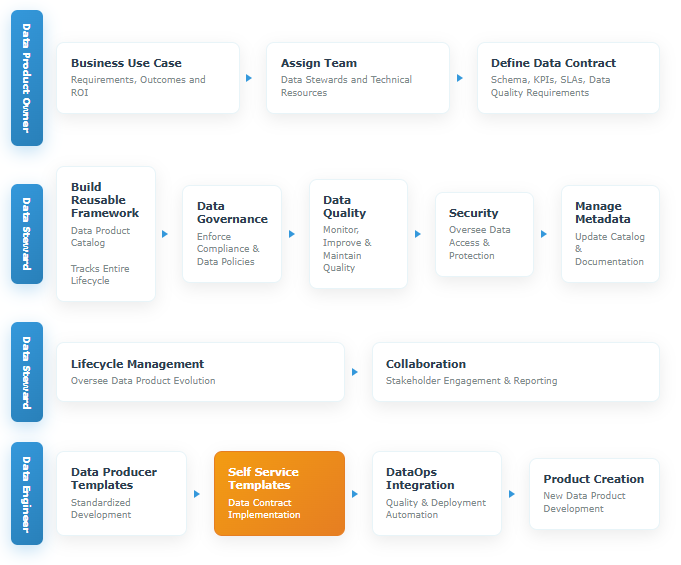

See Also: See Data Product Lifecycle Diagram

Strategy in Action: Data Product Operating Model

Our advisory services aren't theoretical — they're embedded directly into a modular, metadata-driven platform. See how data stewardship, governance, and domain-driven design come together in our operational model for trusted data delivery.

Computational governance enhances stewardship by:

- Automating Oversight: Stewards define policies that are automatically enforced across the lifecycle, reducing manual effort while maintaining comprehensive metadata lineage.

- Enabling Iteration: Real-time observability supports continuous refinement, ensuring data products remain reliable as they evolve through DevOps-style iterations.

- Fostering Collaboration: Stewards bridge data engineers, analysts, and compliance teams, aligning them on governance goals through shared metadata standards and observable metrics.

Integrating Test-Driven Development (TDD)

Test-driven development (TDD) adapts naturally to data engineering when combined with robust metadata management and observability principles. In computational governance, TDD means writing tests for data quality, schema compliance, and governance policies before building data pipelines—creating a foundation of engineering rigor that scales with DataOps velocity.

For example:

- Great Expectations allows stewards to define data quality tests (e.g., no null values, consistent formats) before building ETL pipelines in a data lake.

- dbt Tests validate transformations during development, ensuring data products meet governance standards before deployment.

TDD ensures data products are trustworthy from the start, reducing errors in production and aligning with DataOps’ iterative ethos. Governance professionals can use TDD to codify policies as automated tests, making compliance a proactive part of the lifecycle.

Shift-Left Testing for Early Validation

Traditional testing in Dev/Test/QA environments is often impractical for data lakes due to their scale and dynamic nature. Shift-left testing addresses this by moving validation earlier in the development process, embedding tests into the design and build phases of data pipelines. This approach requires comprehensive metadata management and observability to track what’s being tested, when, and why—essential for maintaining engineering rigor at DataOps speed.

In computational governance:

- In-Line Testing: Tools like Soda or Monte Carlo run automated data quality checks during ingestion and transformation, with rich metadata capture ensuring every test result is traceable and actionable.

- CI/CD Integration: Shift-left tests are part of CI/CD pipelines, validating data and code changes incrementally in tools like Databricks or Snowflake, with observability dashboards providing real-time feedback.

- Cost Efficiency: Early validation reduces the need for costly production fixes, aligning with DataOps’ focus on speed while maintaining the engineering rigor that CTOs and VPs demand.

Shift-left testing ensures governance policies (e.g., PII masking, data lineage) are enforced early, enhancing stewardship by preventing downstream issues.

Data Contracts for Lifecycle Clarity

Data contracts are formal agreements defining the structure, quality, and governance requirements of data products. They act as a blueprint, ensuring data producers and consumers align on expectations throughout the data product lifecycle. More importantly, they embed metadata standards and observability requirements directly into the data architecture, creating the foundation for engineering rigor that scales.

For example:

- A data contract might specify that a dataset must have no null values, adhere to GDPR, include comprehensive lineage metadata, and provide observability metrics for monitoring data freshness and quality.

- Tools like OpenAPI for data or schema registries enforce contracts automatically, with metadata-driven validation ensuring compliance as data moves through pipelines.

Data contracts facilitate stewardship by:

- Clarifying Responsibilities: Stewards use contracts to define ownership and accountability, with metadata lineage making these relationships transparent and traceable.

- Enabling Automation: Contracts are integrated into computational governance tools, automating validation and enforcement while maintaining comprehensive observability.

- Supporting Iteration: Contracts evolve with data products through version control, ensuring governance keeps pace with changes while maintaining the engineering rigor necessary for enterprise scale.

Change Management: The Human Element

The shift to computational governance, TDD, shift-left testing, and data contracts requires cultural and operational transformation. For CTOs and VPs leading data organizations, up-skilling governance professionals in Change Management is non-optional. This transformation demands engineering rigor applied to organizational processes, treating change management itself as a data product with measurable outcomes and observable metrics.

Change Management ensures:

- Tool Adoption: Teams embrace tools like Great Expectations or Unity Catalog through training and communication.

- Cultural Alignment: Stakeholders align on automated governance, overcoming resistance to new workflows.

- Skill Development: Governance professionals learn to manage real-time systems and AI-driven tools, bridging traditional and computational approaches.

Change Management fosters a culture where governance is seen as an enabler of agility, not a barrier, debunking the misconception that governance is “100% opposite” to DataOps and CI/CD.

Why Computational Governance Aligns with DataOps

The perception that Data Governance is antithetical to DataOps and CI/CD stems from traditional governance’s manual, rigid nature. Computational governance resolves this by:

- Embedding Controls: Automated policies and tests are part of DataOps pipelines, ensuring compliance without slowing delivery.

- Real-Time Agility: Real-time monitoring and testing align with DataOps’ continuous refinement, supporting rapid iteration.

- Scalability for AI: Computational governance handles the dynamic needs of AI, ensuring data lakes deliver high-quality, compliant data for model training and inference.

For example, a data lake feeding an AI fraud detection model can use computational governance to enforce real-time quality checks, detect bias, and maintain audit trails, all while supporting DataOps’ speed.

Challenges and Opportunities

While computational governance is transformative, executive leaders must navigate specific challenges:

- Complexity: Automated systems require deep technical expertise to design and maintain, demanding investment in both tools and talent development.

- Bias in AI: Governance tools must address algorithmic bias in real-time, using metadata-driven flagging and contesting mechanisms that maintain audit trails.

- Regulatory Flux: Evolving regulations (e.g., EU AI Act) demand flexible governance frameworks that can adapt through metadata-driven policy updates.

Strategic opportunities for data leaders include:

- Innovation Acceleration: Automated governance with robust metadata and observability frees teams to focus on data product innovation rather than compliance overhead.

- Cost Optimization: Shift-left testing and data contracts reduce production errors, lowering total cost of ownership while improving data product reliability.

- Trust Building: Transparent, real-time governance with comprehensive metadata lineage enhances stakeholder trust in data products and AI systems.

Conclusion

Computational data governance redefines stewardship for the DataOps era, integrating TDD, shift-left testing, and data contracts to manage the data product lifecycle with engineering rigor. By embedding automated policies, comprehensive metadata management, and observability principles into data lake pipelines, it ensures compliance and quality without sacrificing agility. Change Management is critical to drive adoption, equipping governance professionals to champion this evolution while maintaining the technical depth that modern data systems demand.

Far from being “100% opposite” to DataOps, computational governance is its essential enabler. The imperative for data leaders is clear: we must get our data moving at the speed of DevOps, and this takes engineering rigor and clarity around the data product lifecycle and stewardship. Organizations that master this integration will deliver trusted, high-value data products at scale, while those that don’t will find themselves outpaced by competitors who’ve embraced governance as a competitive advantage rather than a compliance burden.

About the Author

Brian Brewer, CTO of InfoLibrarian™, brings over 20 years of consulting experience, leading SMBs and enterprises to architectural success. His Metadata Value Method™ emerged from this journey, now driving the company’s modernization mission. Learn more about my journey in my story or our history on the company page to see how this transformation began.